See my Google Scholar for the most up-to-date list of papers.

2026

arXiv

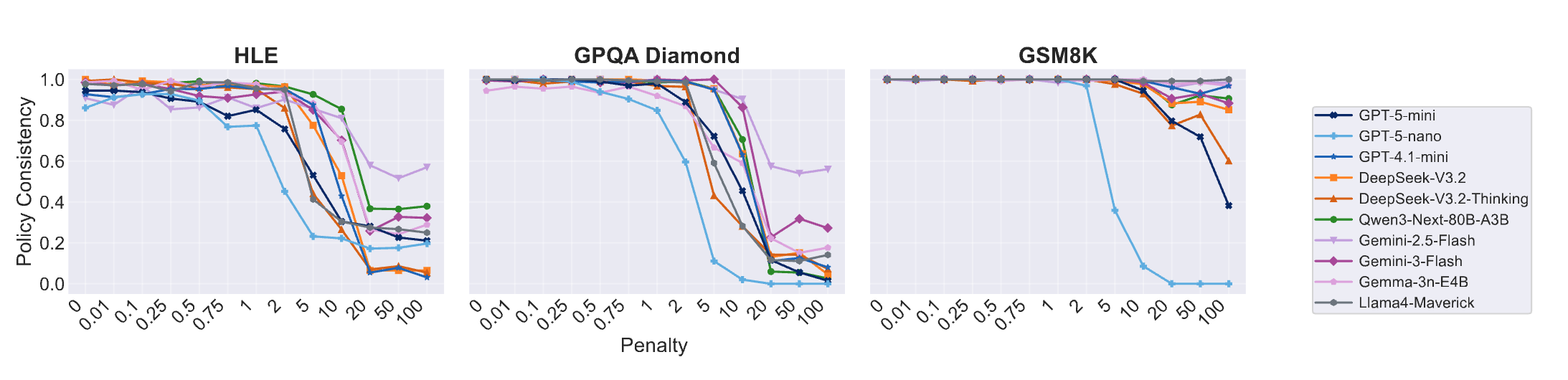

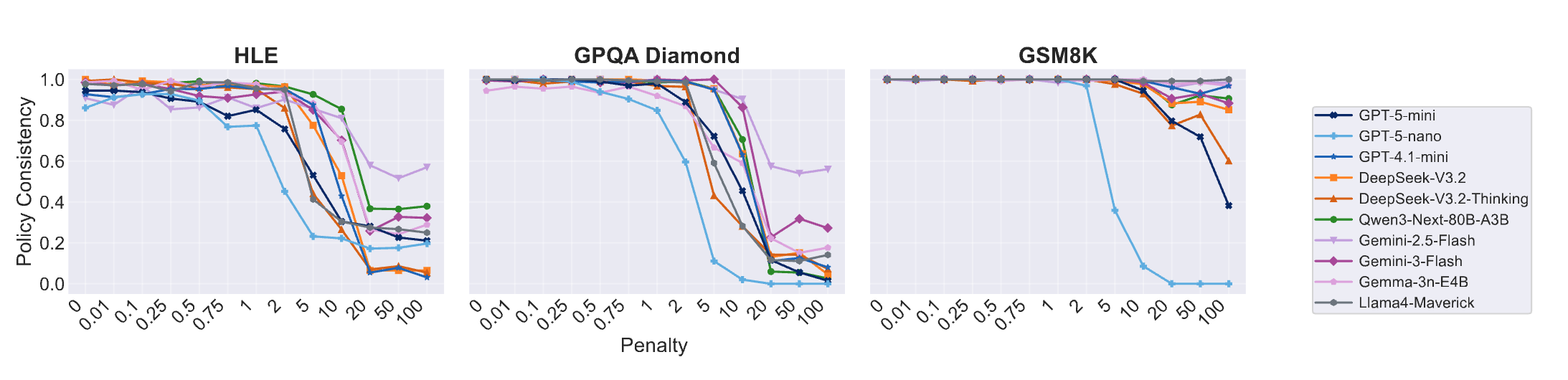

Are LLM Decisions Faithful to Verbal Confidence?

Jiawei Wang, Yanfei Zhou, Siddartha Devic, and Deqing Fu

In arXiv, 2026

ICLR

Zebra-CoT: A Dataset for Interleaved Vision Language Reasoning

Ang Li*, Charles Wang*, Deqing Fu*, Kaiyu Yue*, Zikui Cai*, Wang Bill Zhu*, Ollie Liu*, Peng Guo*, Willie Neiswanger, Furong Huang, Tom Goldstein, and Micah Goldblum

In International Conference on Learning Representations (ICLR), 2026

*Equal Contribution

ICLR

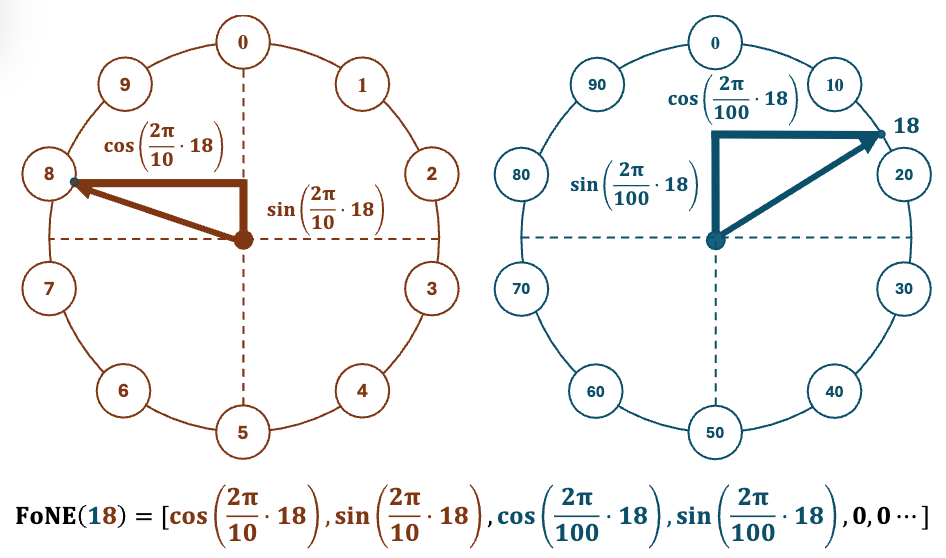

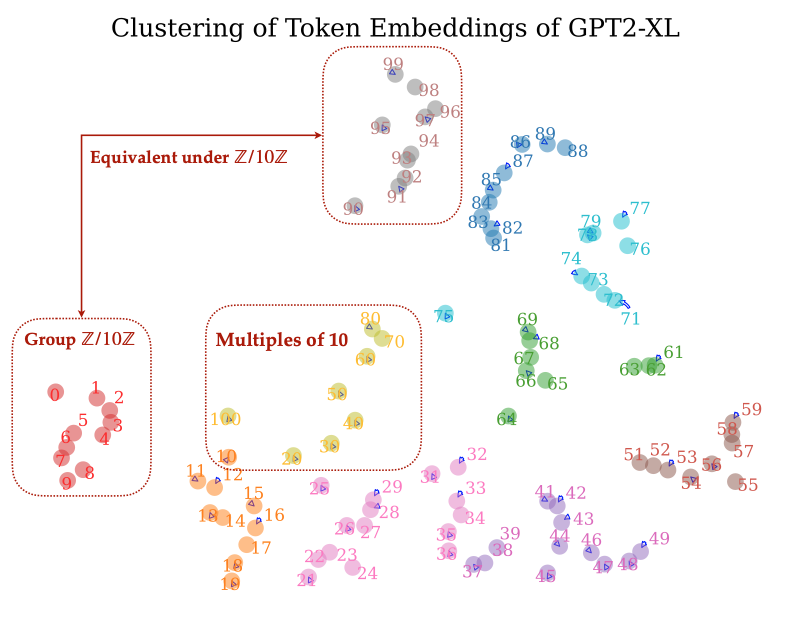

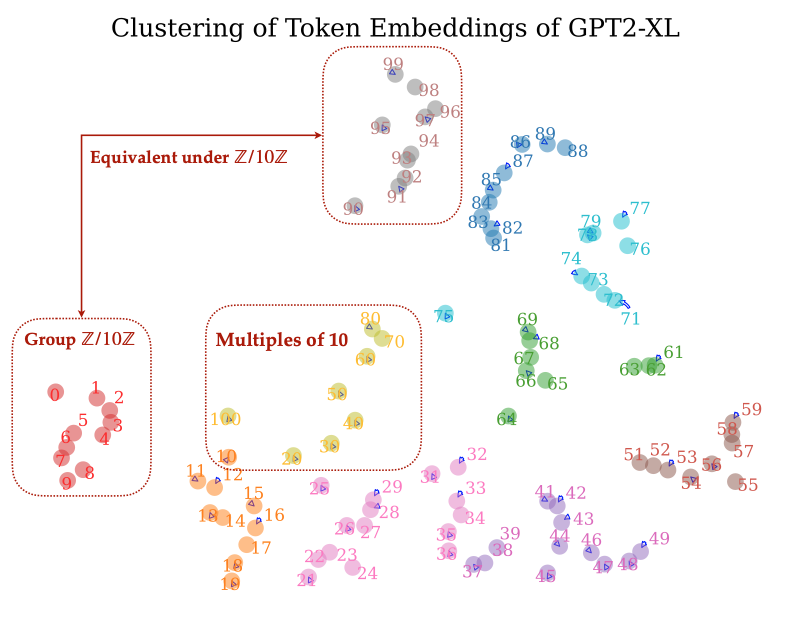

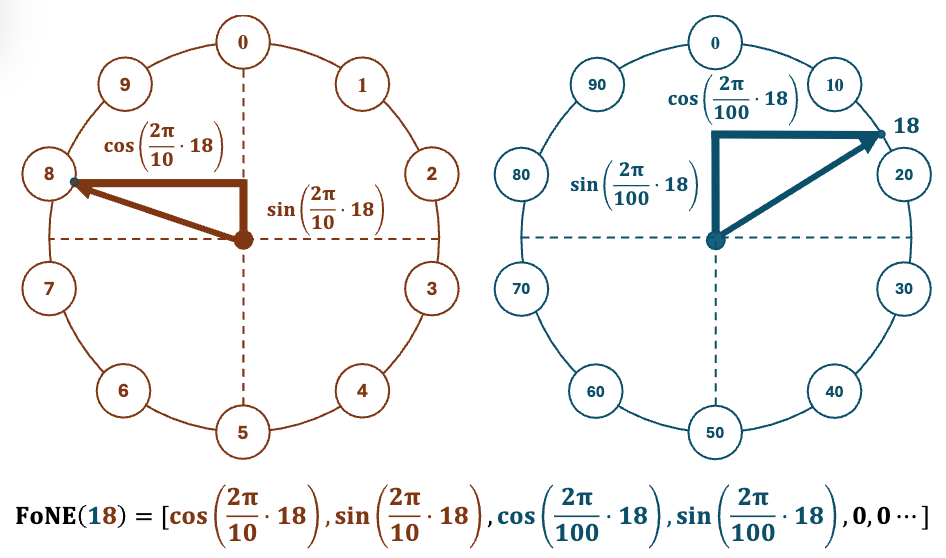

FoNE: Precise Single-Token Number Embeddings via Fourier Features

In International Conference on Learning Representations (ICLR), 2026

2025

-

arXiv

-

arXiv

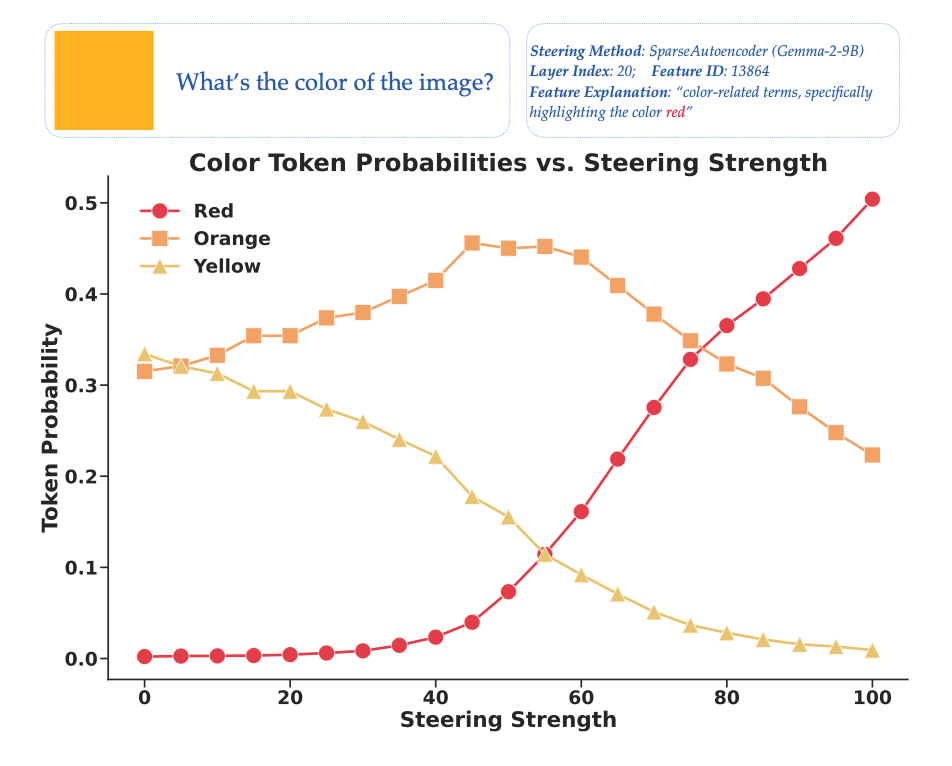

Resa: Transparent Reasoning Models via SAEs

In arXiv, 2025

-

arXiv

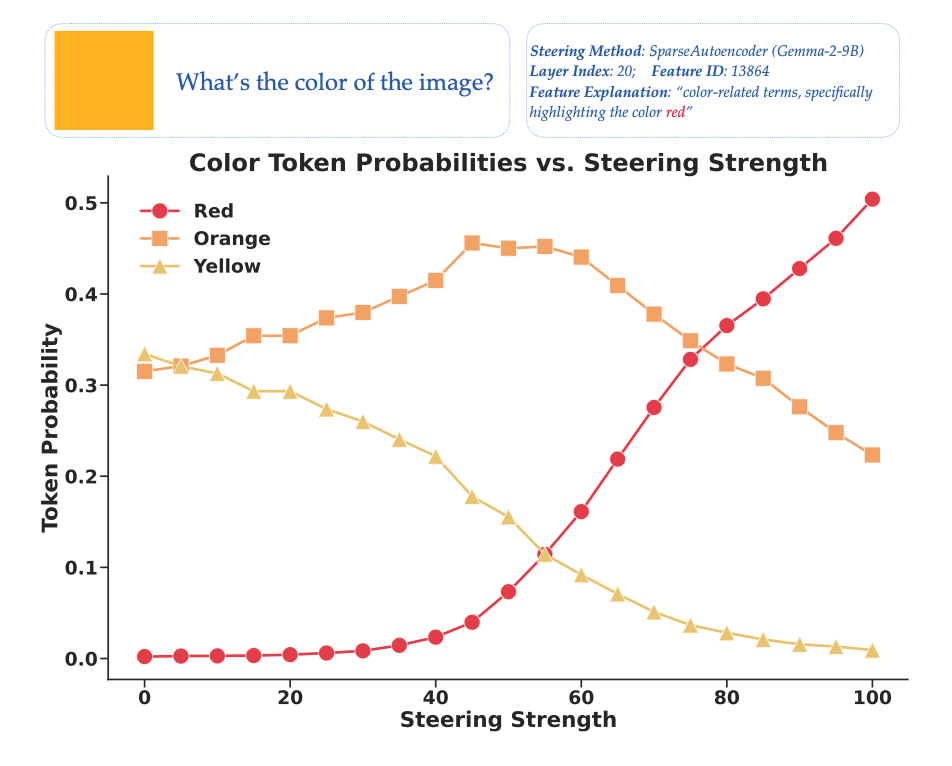

Textual Steering Vectors Can Improve Visual Understanding in Multimodal Large Language Models

In arXiv, 2025

*Equal Contribution

-

NeurIPS

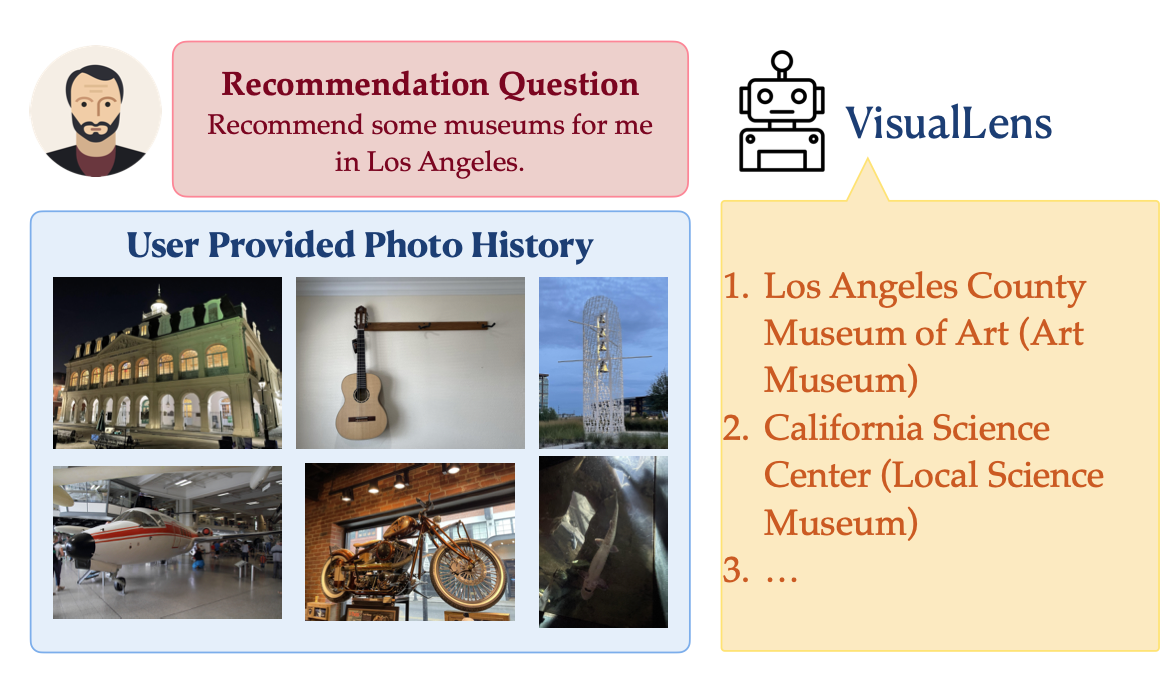

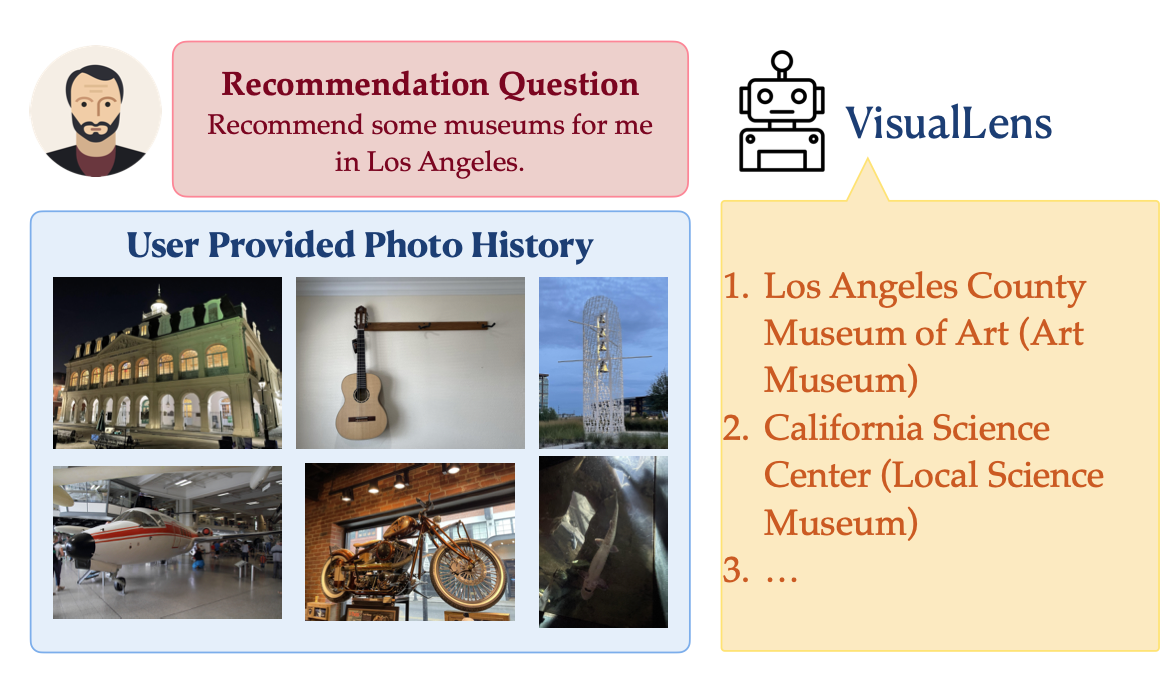

VisualLens: Personalization through Visual History

Wang Bill Zhu,

Deqing Fu , Kai Sun, Yi Lu, Zhaojiang Lin, Seungwhan Moon, Kanika Narang, Mustafa Canim , Yue Liu, Anuj Kumar, and Xin Luna Dong

In Conference on Neural Information Processing Systems (NeurIPS), 2025

-

ICLR

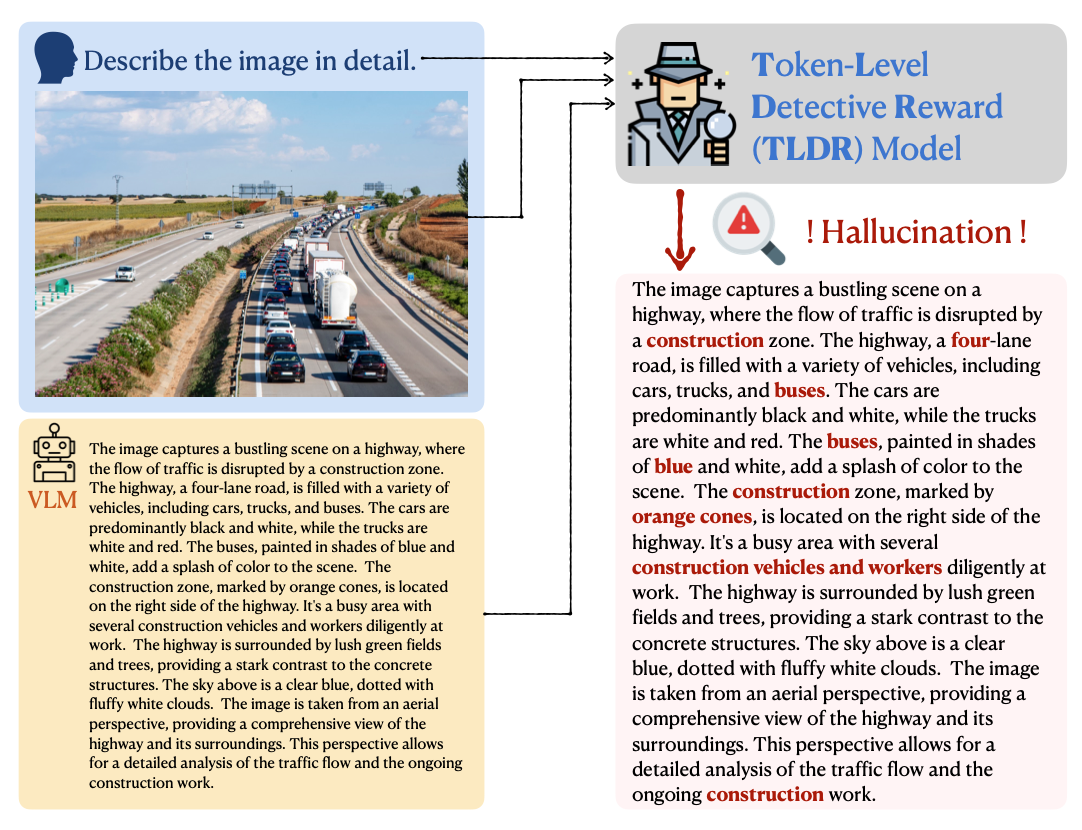

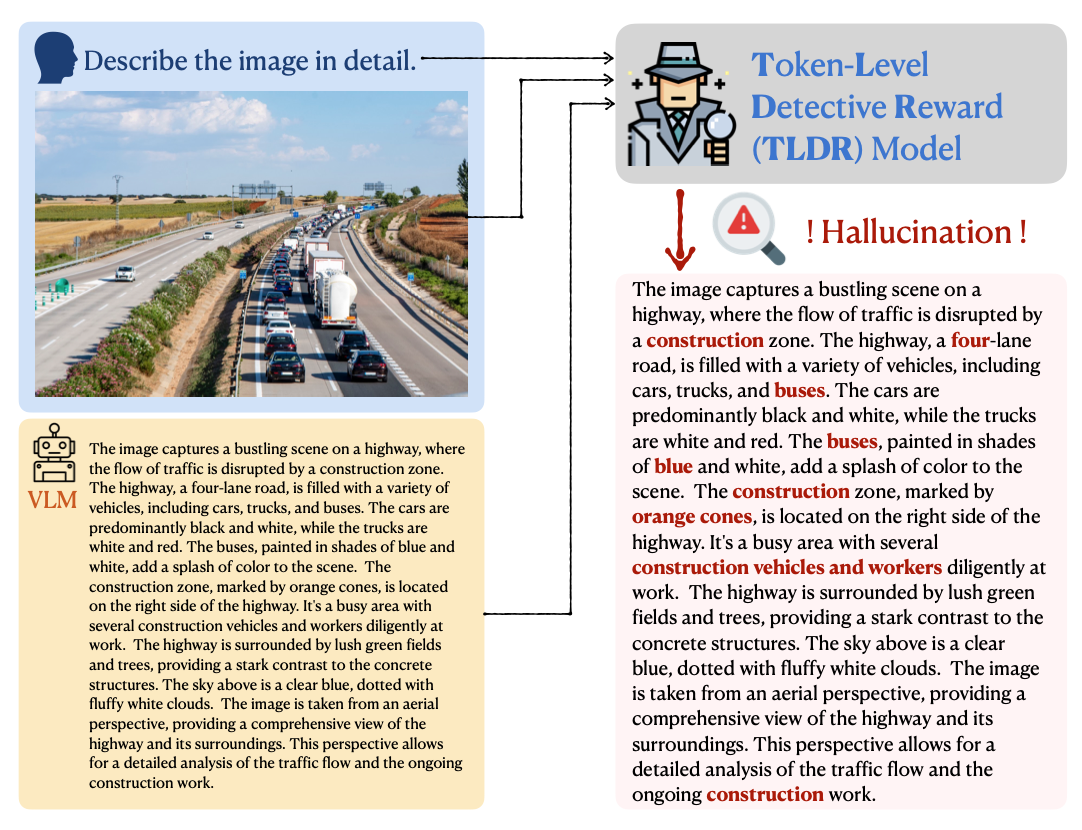

TLDR: Token-Level Detective Reward Model for Large Vision Language Models

Deqing Fu, Tong Xiao , Rui Wang,

Wang Zhu, Pengchuan Zhang, Guan Pang,

Robin Jia, and Lawrence Chen

In International Conference on Learning Representations (ICLR), 2025

-

ICLR

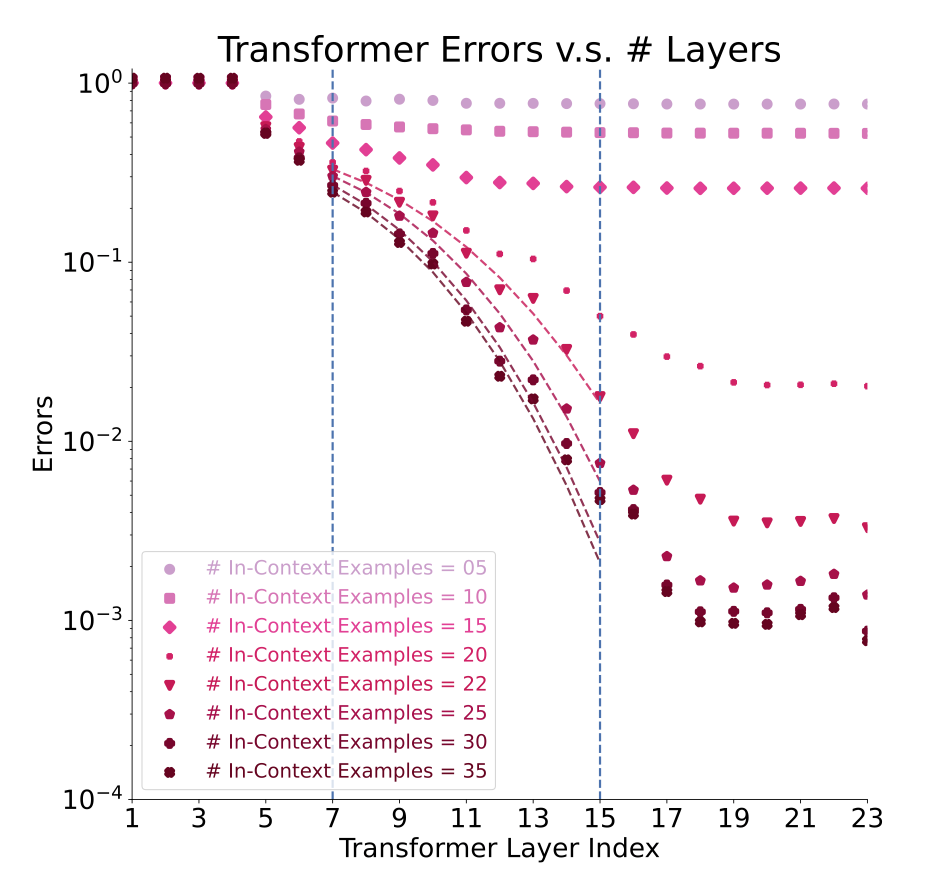

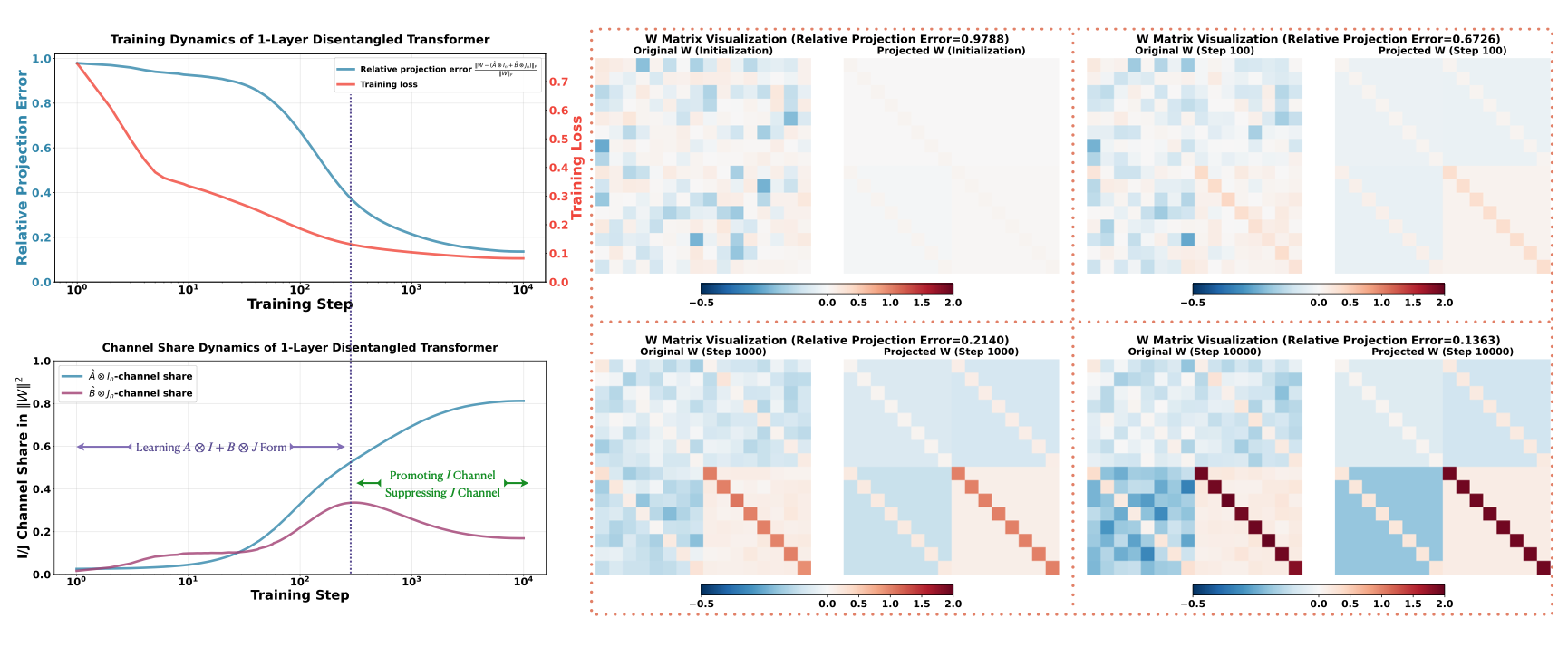

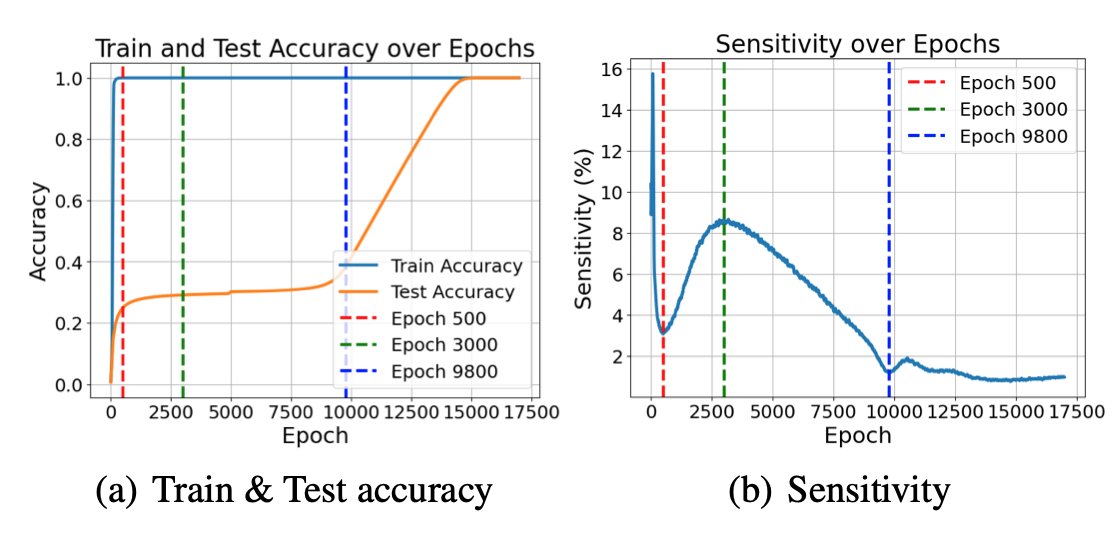

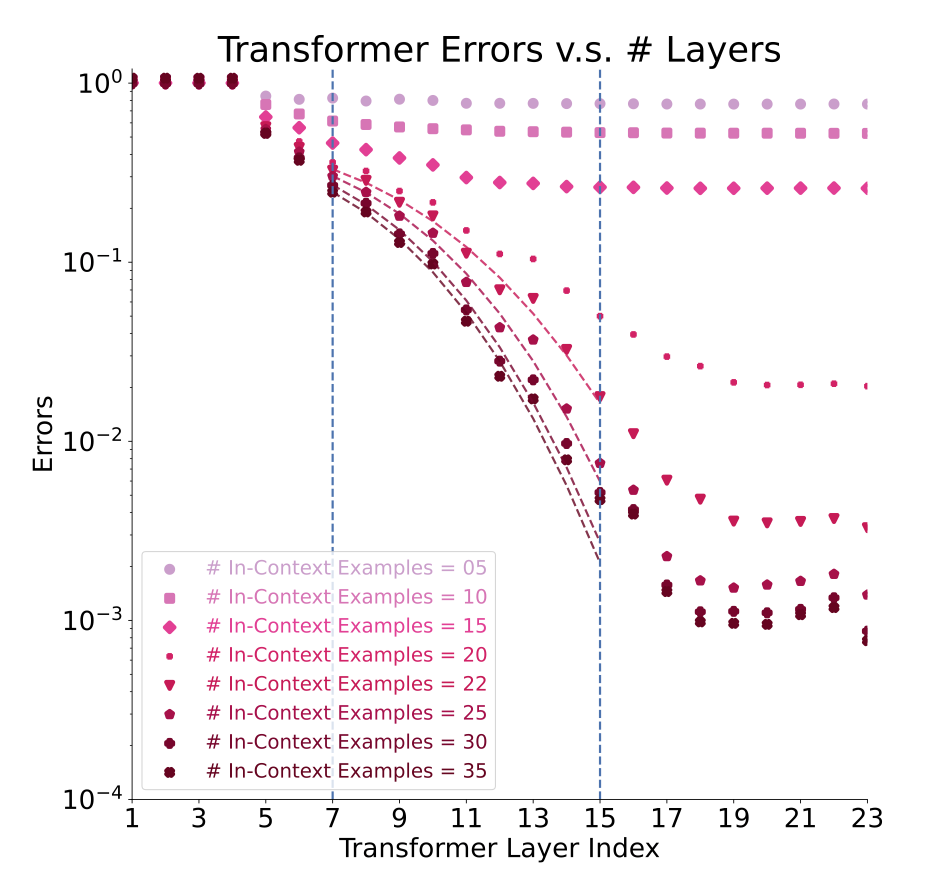

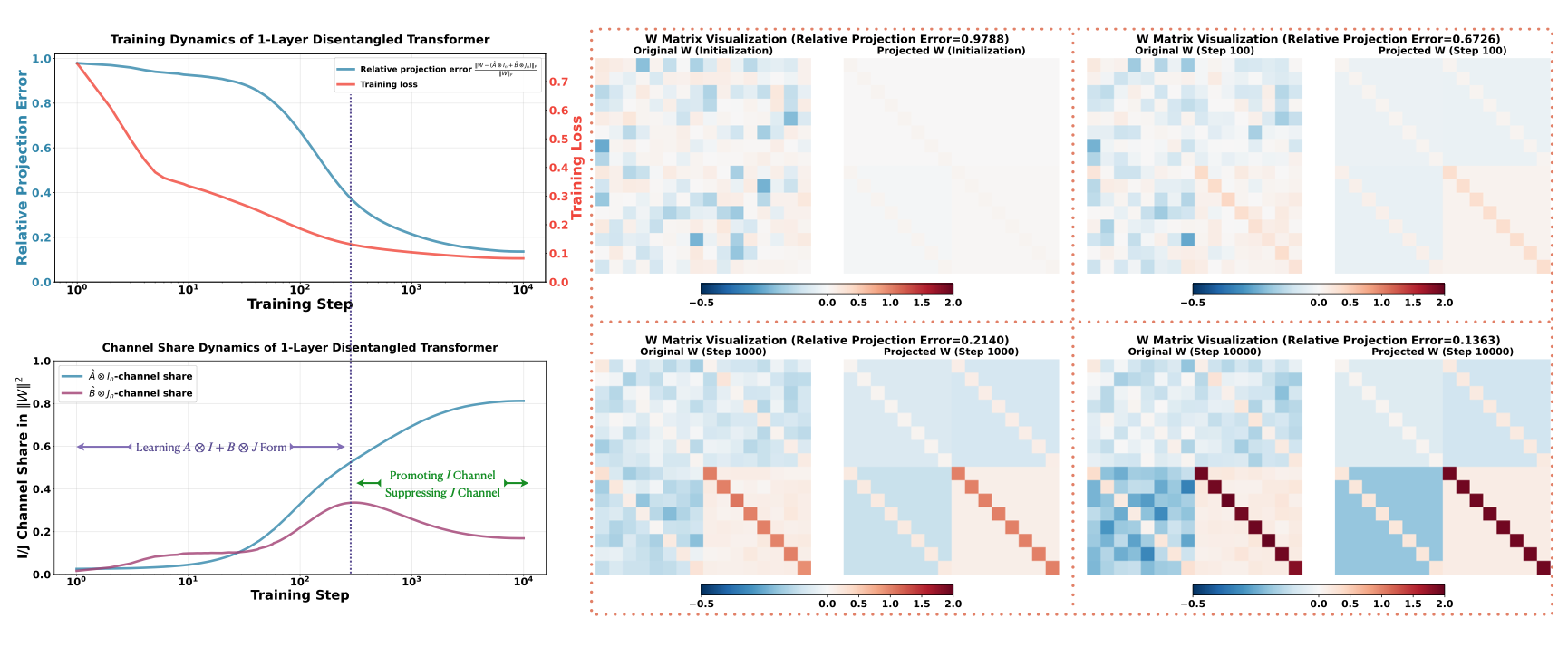

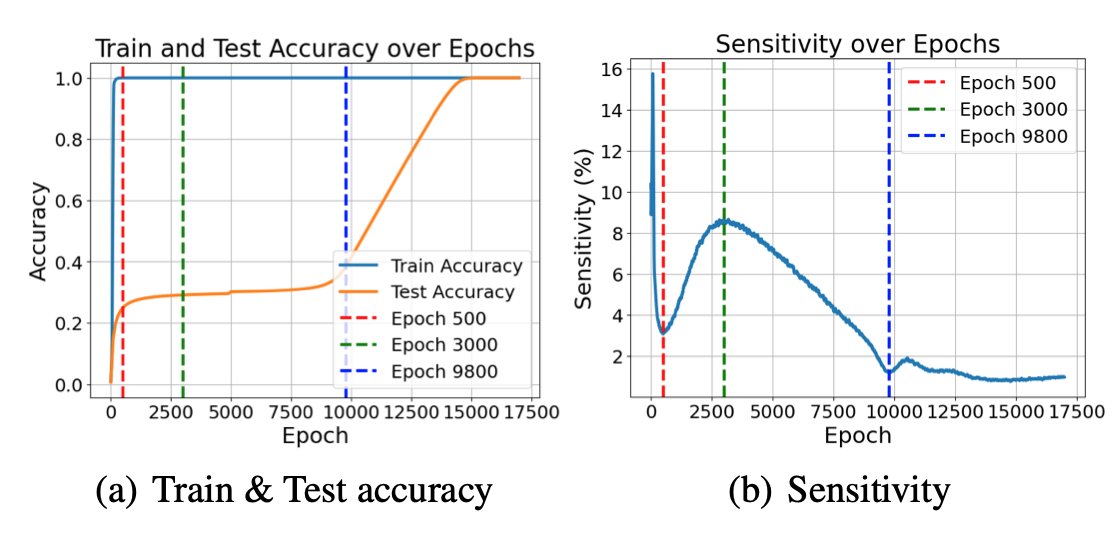

Transformers Learn Low Sensitivity Functions: Investigations and Implications

In International Conference on Learning Representations (ICLR), 2025

*Equal Contribution

-

ICLR

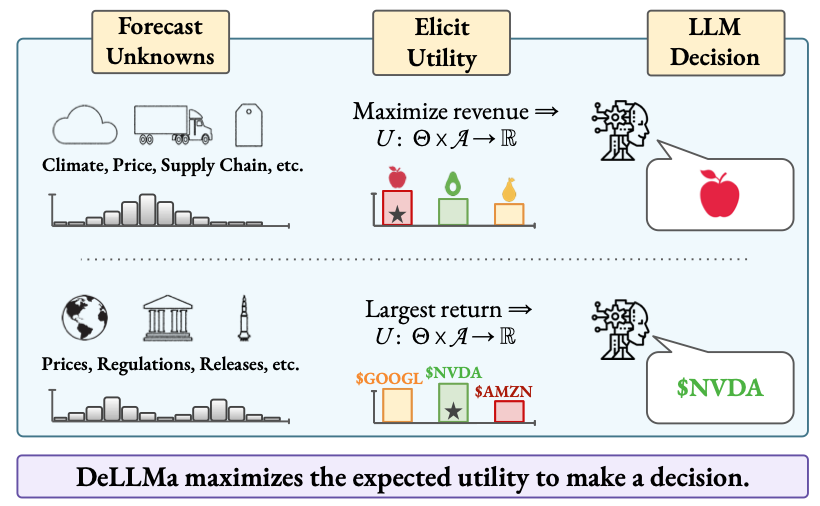

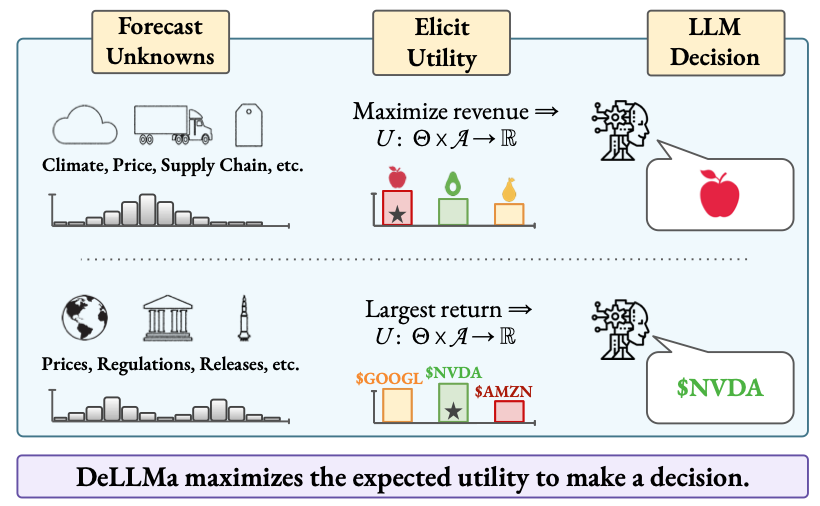

DeLLMa: Decision Making Under Uncertainty with Large Language Models

In International Conference on Learning Representations (ICLR), 2025

Spotlight (Top 5.1%), *Equal Contribution

-

NAACL

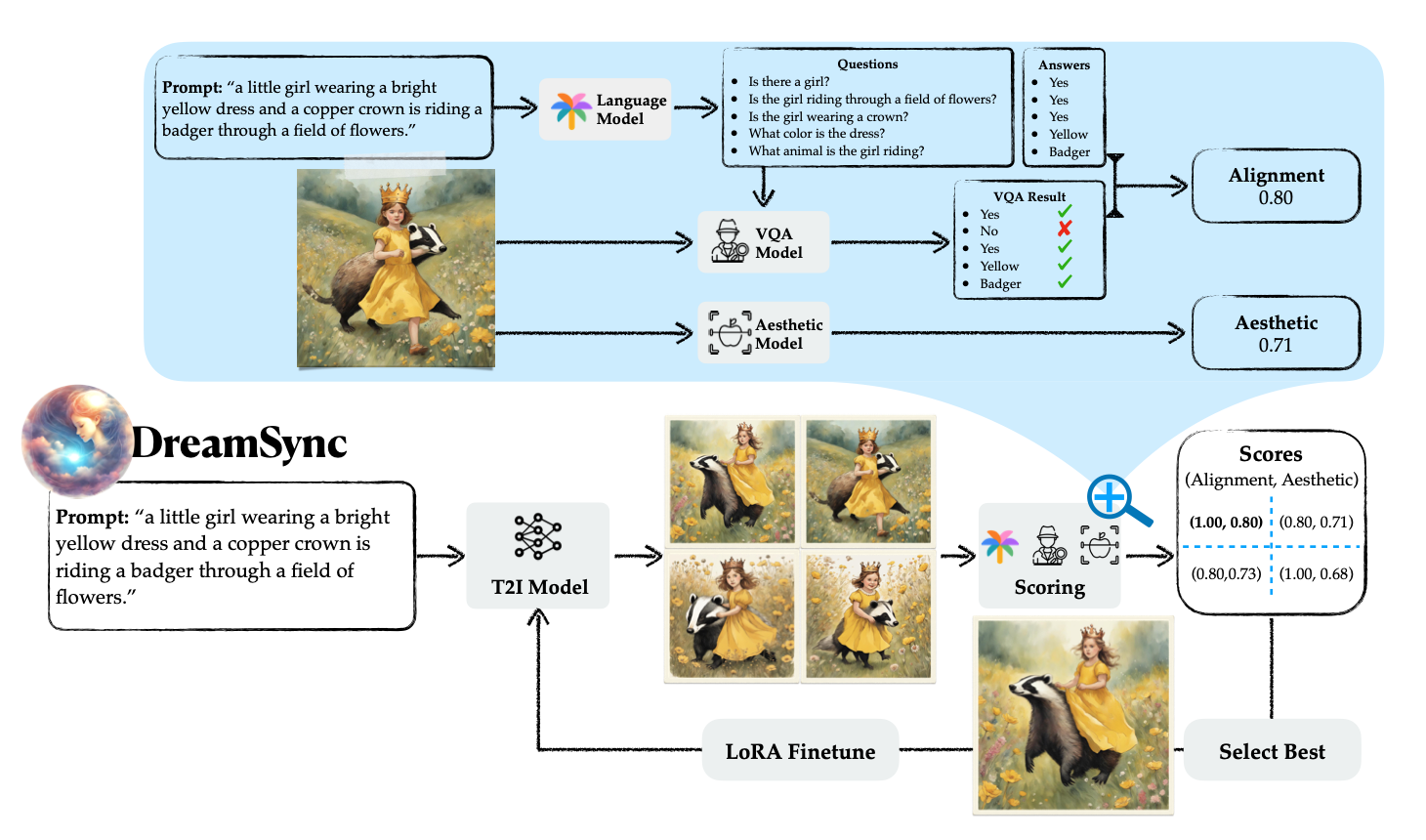

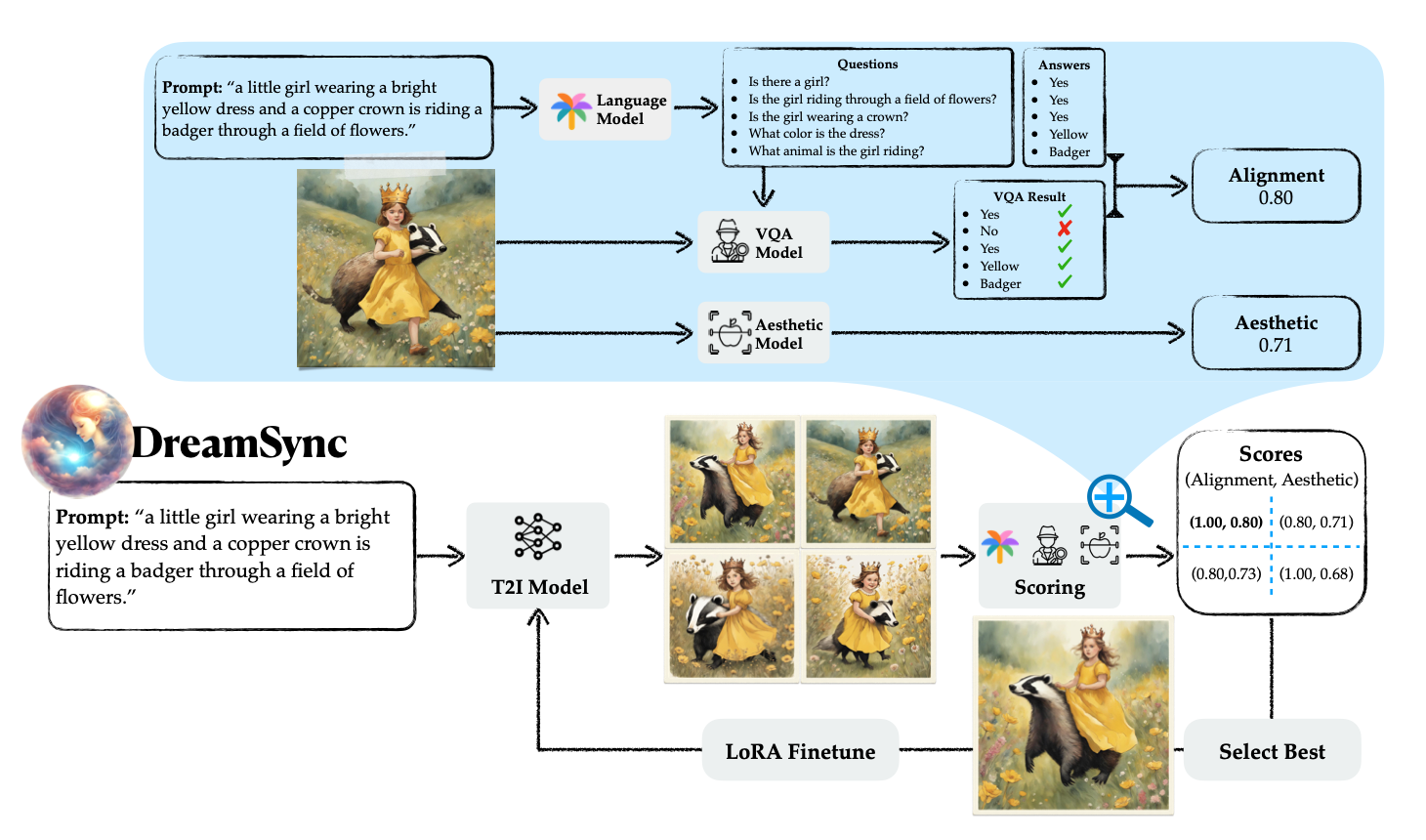

DreamSync: Aligning Text-to-Image Generation with Image Understanding Feedback

Jiao Sun*,

Deqing Fu*,

Yushi Hu* , Su Wang, Royi Rassin, Da-Cheng Juan, Dana Alon, Charles Herrmann, Sjoerd Steenkiste,

Ranjay Krishna, and

Cyrus Rashtchian In Annual Conference of the North American Chapter of the Association for Computational Linguistics (NAACL), 2025

*Equal Contribution

2024

-

NeurIPS

-

NeurIPS

Pre-trained Large Language Models Use Fourier Features to Compute Addition

In Conference on Neural Information Processing Systems (NeurIPS), 2024

-

COLM

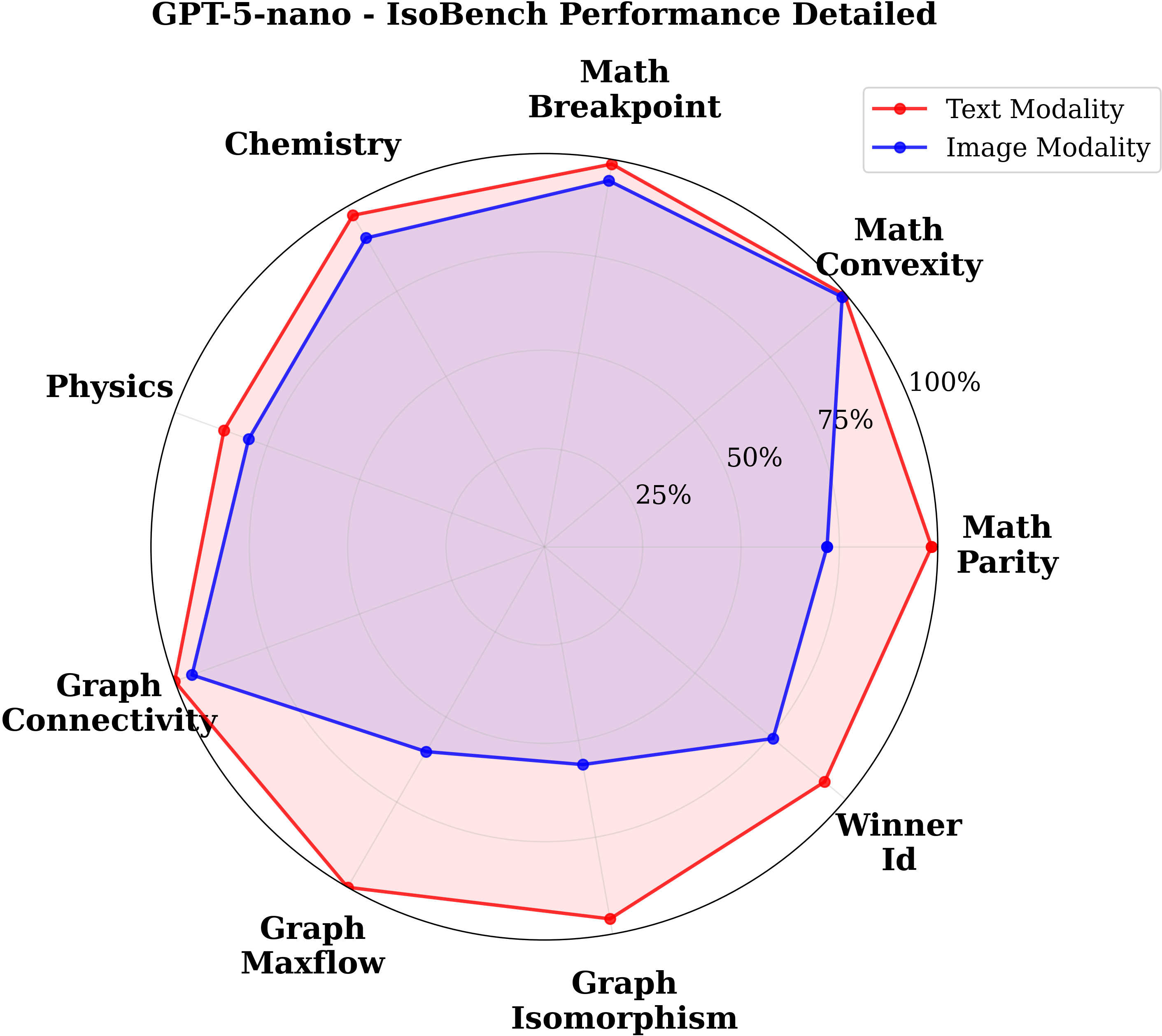

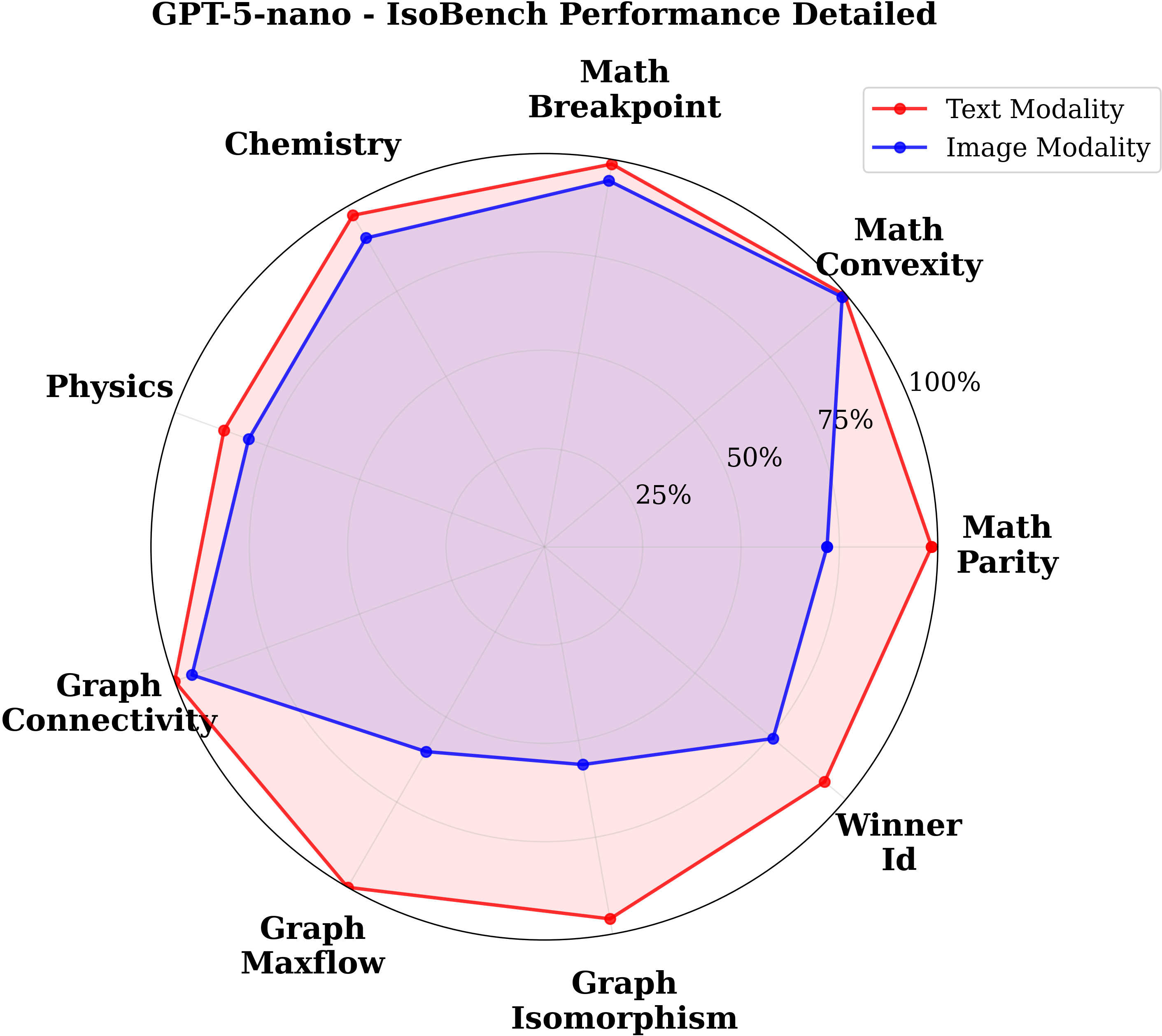

IsoBench: Benchmarking Multimodal Foundation Models on Isomorphic Representations

In Conference on Language Modeling (COLM), 2024

*Equal Contribution

2023

-

EMNLP

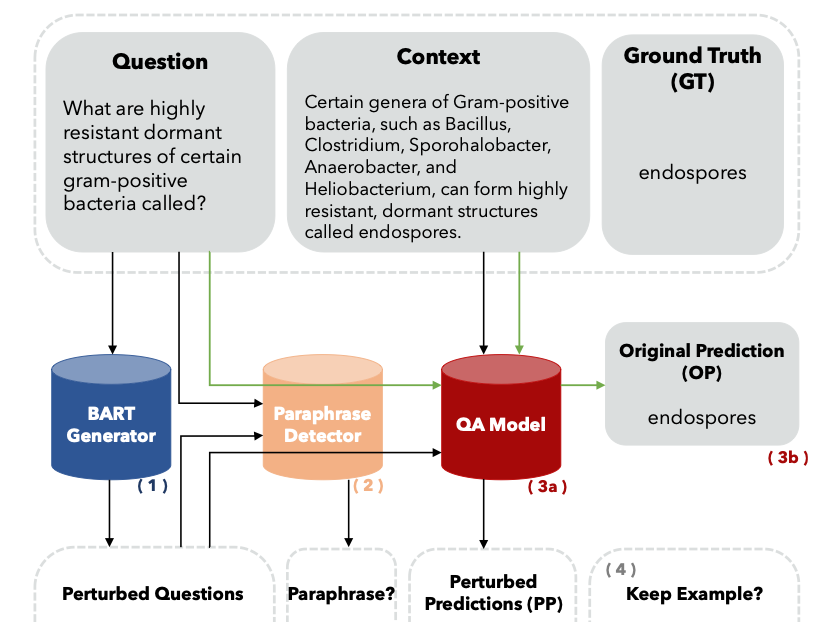

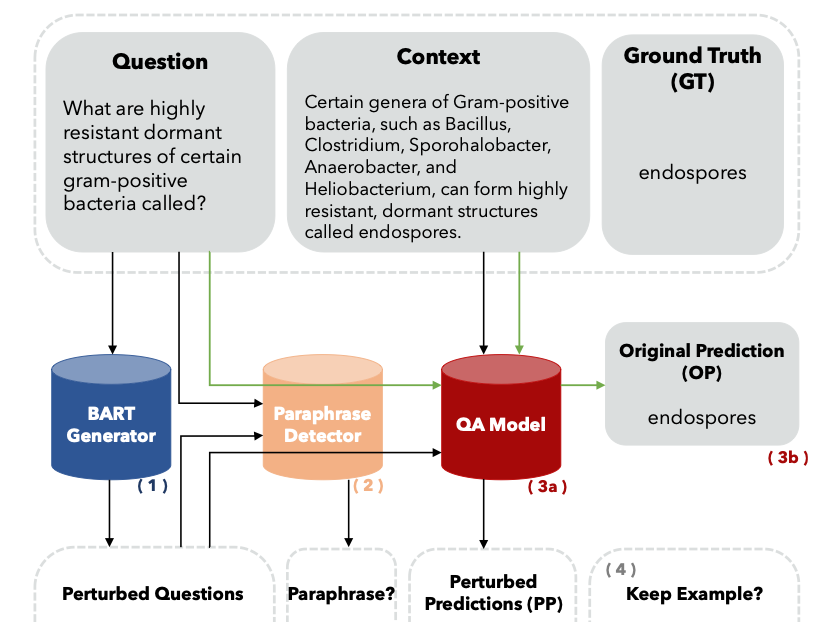

SCENE: Self-Labeled Counterfactuals for Extrapolating to Negative Examples

In Conference on Empirical Methods in Natural Language Processing (EMNLP), 2023

When Do Transformers Learn Heuristics for Graph Connectivity?In arXiv, 2025*Equal Contribution

When Do Transformers Learn Heuristics for Graph Connectivity?In arXiv, 2025*Equal Contribution

VisualLens: Personalization through Visual HistoryIn Conference on Neural Information Processing Systems (NeurIPS), 2025

VisualLens: Personalization through Visual HistoryIn Conference on Neural Information Processing Systems (NeurIPS), 2025 TLDR: Token-Level Detective Reward Model for Large Vision Language ModelsIn International Conference on Learning Representations (ICLR), 2025

TLDR: Token-Level Detective Reward Model for Large Vision Language ModelsIn International Conference on Learning Representations (ICLR), 2025 Transformers Learn Low Sensitivity Functions: Investigations and ImplicationsIn International Conference on Learning Representations (ICLR), 2025*Equal Contribution

Transformers Learn Low Sensitivity Functions: Investigations and ImplicationsIn International Conference on Learning Representations (ICLR), 2025*Equal Contribution DreamSync: Aligning Text-to-Image Generation with Image Understanding FeedbackIn Annual Conference of the North American Chapter of the Association for Computational Linguistics (NAACL), 2025*Equal Contribution

DreamSync: Aligning Text-to-Image Generation with Image Understanding FeedbackIn Annual Conference of the North American Chapter of the Association for Computational Linguistics (NAACL), 2025*Equal Contribution Pre-trained Large Language Models Use Fourier Features to Compute AdditionIn Conference on Neural Information Processing Systems (NeurIPS), 2024

Pre-trained Large Language Models Use Fourier Features to Compute AdditionIn Conference on Neural Information Processing Systems (NeurIPS), 2024

Dataset

Dataset

Code

Code